Introduction

When it comes to apps with a lot of data, some SQL queries take more time than expected, especially when we want to search text across several model attributes. This most commonly happens in e-commerce stores, but these kinds of complex queries also show up in social media apps or in any feature that makes suggestions based on user information or interaction with the platform.

In these cases, we want to retrieve data from our database given a query a user made.

It is reasonable to expect a user query to touch attributes from several different models, and that is when queries start to get complex and inefficient. What happens when our models' attributes and their relationships start to grow? How do we manage user typos in those queries? What happens when a user looks for ‘ps4’ instead of ‘playstation 4’?

We’ll try to answer these and other questions about using Elasticsearch in a Ruby on Rails app.

In this tutorial, we will assume you have a basic understanding of Ruby on Rails applications, an Elasticsearch server installed, and a Rails app to integrate it with. If this is not the case, you can follow the official Elastic documentation and use our awesome Ruby on Rails boilerplate.

But first… What is Elasticsearch?

Elasticsearch is described as a “distributed, RESTful search and analytics engine capable of addressing a growing number of use cases. (...) it centrally stores your data for lightning-fast search, fine‑tuned relevancy, and powerful analytics that scale with ease”. In my humble opinion that’s pretty true, thanks to its scalable and distributed architecture.

This open source project is built on top of Apache Lucene, a full-featured text search engine, and that is where most of the magic happens.

In a nutshell, we’ll use Elasticsearch as a secondary database (a non-relational one), taking advantage of its text pre-processing and indexing power.

A small disclaimer

Although there are lots of interesting and useful gems for working with Elasticsearch in Rails, like chewy, searchkick or search_flip (which I encourage you to take a look at), in this project we are going to use the two basic libraries provided by the Elasticsearch team: elasticsearch-rails and elasticsearch-model. These two gems let us interact with the Elastic server instance using an ActiveRecord-like syntax, without having to write all the specific DSL, and also update our records in the Elastic instance so it stays as synchronized as possible with our relational database.

Let’s get down to work!

Tutorial to Build a Microblogging Searcher

We’re gonna build a simple microblogging searcher that finds posts despite potential typos and highlights the matching results, all while staying fast. For this, we will use text analyzers and tokenizers (natural language processing tools that come out of the box with Elastic).

Step 1: Including required gems

Add the two Elastic gems to your Rails Gemfile and install them by running [.c-inline-code]bundle install[.c-inline-code].
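As a reference, the Gemfile entries could look like the following sketch. No versions are pinned here, which is an assumption on my side; in a real project you will probably want to pin both gems to the release line that matches your Elasticsearch server.

```ruby
# Gemfile
# Official Elasticsearch integrations for ActiveModel/ActiveRecord and Rails.
gem 'elasticsearch-model'
gem 'elasticsearch-rails'
```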

Step 2: Adding Elastic behaviour to our model

Given a simplified [.c-inline-code]Post[.c-inline-code] model with only a [.c-inline-code]title[.c-inline-code], a [.c-inline-code]body[.c-inline-code] and a [.c-inline-code]topic[.c-inline-code], add the following lines to it:
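A minimal sketch of those lines, assuming a Rails 5+ app where models inherit from [.c-inline-code]ApplicationRecord[.c-inline-code] (the file path is also an assumption):

```ruby
# app/models/post.rb
class Post < ApplicationRecord
  # Adds the search and indexing interface to the model (e.g. Post.search).
  include Elasticsearch::Model
  # Registers callbacks that re-index the document on create, update and destroy.
  include Elasticsearch::Model::Callbacks
end
```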

The first [.c-inline-code]include[.c-inline-code] lets us make Elasticsearch calls with a friendly ActiveRecord-like syntax. This way we can, for example, just call [.c-inline-code]Post.search(query)[.c-inline-code] to search. Otherwise, we would have to make an API call to the Elastic server instance by hand every time we want to interact with our data.

The second [.c-inline-code]include[.c-inline-code] adds callbacks to our model, so every time we create, edit or delete a [.c-inline-code]Post[.c-inline-code], the action is replicated in Elasticsearch.

This last point is very important. Keep in mind that we have two independent databases: one where we persist all the model data as you’re used to (Postgres), and this new one for fast searches, suggestions and all the other fantastic Elasticsearch features.

Sometimes (for performance or even business requirements), we don’t want to update data in Elasticsearch instantaneously every time a record has been changed in our main database (this is customizable but we won’t tackle this in the current article).

Step 3: Defining our Elasticsearch index

In Elasticsearch we don’t have tables or rows. Our models are stored in [.c-inline-code]indexes[.c-inline-code] and each instance is called a [.c-inline-code]document[.c-inline-code]. Although Elastic can automatically detect the types of our model's attributes, we are going to declare them explicitly to have more control over them, by adding the following to our model:
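The mapping could be declared roughly as follows. This is a sketch that goes inside the [.c-inline-code]Post[.c-inline-code] class body, after the two includes. Declaring [.c-inline-code]id[.c-inline-code] as a keyword is my assumption for expressing "indexed but not analyzed" on recent Elasticsearch versions; adjust it to whatever your server version expects.

```ruby
settings do
  mappings dynamic: false do
    indexes :id, type: :keyword                      # stored as-is, never analyzed
    indexes :title, type: :text, analyzer: :english  # full-text field, English analyzer
    indexes :body, type: :text, analyzer: :english   # full-text field, English analyzer
    indexes :topic, type: :keyword                   # exact value, no analysis
  end
end
```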

What are we doing here?

In the second line, with [.c-inline-code]dynamic: false[.c-inline-code] we prevent new fields from being created accidentally. If you choose [.c-inline-code]dynamic: true[.c-inline-code], every unlisted attribute will be added to the index mapping automatically whenever a document is created or updated.

In the third line we are telling Elastic to take our [.c-inline-code]id[.c-inline-code] attribute and index it in the [.c-inline-code]Post[.c-inline-code] index without analyzing it. This means no filter or transformation is applied to it.

In the fourth and fifth lines, we're adding both [.c-inline-code]title[.c-inline-code] and [.c-inline-code]body[.c-inline-code] as text fields with an English analyzer. This runs tokenizers, filters and other natural language processing tools every time we insert or edit a document in our index, making searches faster, easier and more tolerant of word variations (be aware that all this processing takes extra time at indexing). See the Elasticsearch documentation on language analyzers and tokenizers if you’re interested in learning more.

And last but not least, we are declaring [.c-inline-code]topic[.c-inline-code] as a keyword, meaning this field is indexed as a single exact value (it is neither tokenized nor analyzed), which makes inserts and edits faster. This is because we don't expect to run full-text searches on this field, only exact matches or filters, but feel free to play with different options.

Step 4: Starting our Elasticsearch instance and creating our index

Assuming we already installed Elasticsearch, we'll start an instance by running [.c-inline-code]elasticsearch[.c-inline-code] in a terminal (I installed it with Homebrew). This will launch a server on port 9200 by default. To check that everything is working as expected, we can make a [.c-inline-code]GET[.c-inline-code] request with [.c-inline-code]curl "http://localhost:9200/"[.c-inline-code] (or just open the URL in a browser). If your installation has security enabled, use [.c-inline-code]https[.c-inline-code] instead.

The response should be a small JSON document with the node name, the cluster name, version details and the tagline "You Know, for Search".

Now that we have our Elastic instance up and running, let's open a Rails console and create the corresponding index by executing:
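With elasticsearch-model, the index can be created through the [.c-inline-code]__elasticsearch__[.c-inline-code] proxy. A minimal sketch, assuming the [.c-inline-code]Post[.c-inline-code] model from the previous steps:

```ruby
# Run inside `rails console`.
# Creates the index using the settings and mappings declared in the Post model.
Post.__elasticsearch__.create_index!

# To drop and recreate an existing index instead (this deletes its documents):
# Post.__elasticsearch__.create_index!(force: true)
```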

If you already had some posts created, you will need to reindex them by running the following:
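A sketch of the reindexing call, again assuming the [.c-inline-code]Post[.c-inline-code] model includes [.c-inline-code]Elasticsearch::Model[.c-inline-code]:

```ruby
# Run inside `rails console`.
# Reads the existing Post records in batches and bulk-indexes them.
Post.import
```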

This can take A LOT of time if you have many records, since Elastic has to analyze and tokenize all of them.

Step 5: Defining the search method we will call from the controller

In the [.c-inline-code]Post[.c-inline-code] model, we'll define this basic method that will execute a query in our Elasticsearch instance, taking a string and looking for it in the specified fields.

This simple query will search through all our posts and return them ordered by relevance. The ‘[.c-inline-code]^5[.c-inline-code]’ after [.c-inline-code]body[.c-inline-code] makes a match in that attribute weigh five times as much as one in [.c-inline-code]title[.c-inline-code].
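One possible shape for that method is sketched below. The module and method names ([.c-inline-code]PostSearch[.c-inline-code], [.c-inline-code]search_body[.c-inline-code], [.c-inline-code]search_posts[.c-inline-code]) are hypothetical and not part of the gems. The only library call used is [.c-inline-code]search[.c-inline-code], which [.c-inline-code]Elasticsearch::Model[.c-inline-code] adds to the model class.

```ruby
# Hypothetical helper; the module and method names are illustrative only.
module PostSearch
  # 'body^5' boosts matches in body five times relative to title.
  SEARCH_FIELDS = ['title', 'body^5'].freeze

  # Builds the request body as a plain Hash (no cluster needed to inspect it).
  def search_body(query)
    {
      query: {
        multi_match: {
          query: query,
          fields: SEARCH_FIELDS
        }
      }
    }
  end

  # Delegates to `search`, which Elasticsearch::Model defines on the model class.
  def search_posts(query)
    search(search_body(query))
  end
end

# In app/models/post.rb, after including Elasticsearch::Model:
#   extend PostSearch
# and then, for example:
#   Post.search_posts('dark')
```

Keeping the Hash builder separate from the call to [.c-inline-code]search[.c-inline-code] is just a design choice: it lets you check the query body in a unit test without a running Elasticsearch instance.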

Step 6: Creating the search method in the controller, its view and routes

My controller method looks just like this:

Also, I built this simple view with a little Bootstrap.
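A controller along these lines would do the job. This is only a sketch: the controller name, the [.c-inline-code]index[.c-inline-code] action and the [.c-inline-code]q[.c-inline-code] parameter are assumptions, and it calls the built-in [.c-inline-code]Post.search[.c-inline-code] so that it works on its own.

```ruby
# app/controllers/searches_controller.rb (hypothetical name and action)
class SearchesController < ApplicationController
  def index
    @query = params[:q].to_s.strip
    # `records` loads the matching ActiveRecord objects from the database.
    # Swap Post.search for the custom search method defined in the model.
    @posts = @query.empty? ? Post.none : Post.search(@query).records
  end
end

# config/routes.rb (hypothetical route):
#   get '/searches', to: 'searches#index'
```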

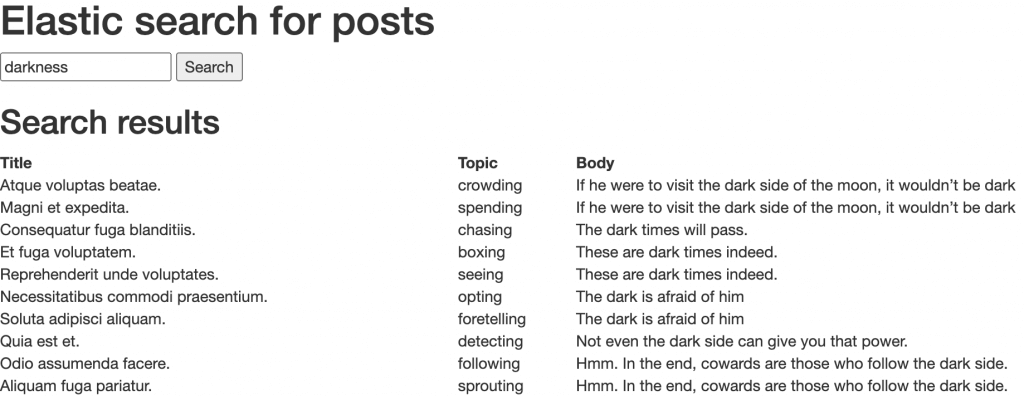

As you can see, I created a bunch of posts using faker.

Take a look at that search again… Don’t you see anything special? We looked for ‘darkness’ and, although no post contains that exact word, the ones containing ‘dark’ were displayed. This is because of the text analyzers: the English analyzer applies stemming, so ‘darkness’ and ‘dark’ are reduced to the same root before they are compared.

Pretty easy, wasn’t it?

What happens if we look for ‘dakr’ instead of ‘dark’?

Oops, no results. Let’s fix this.

Step 7: Searching with typos and highlighting the matches in search results

Our search will now look like this:

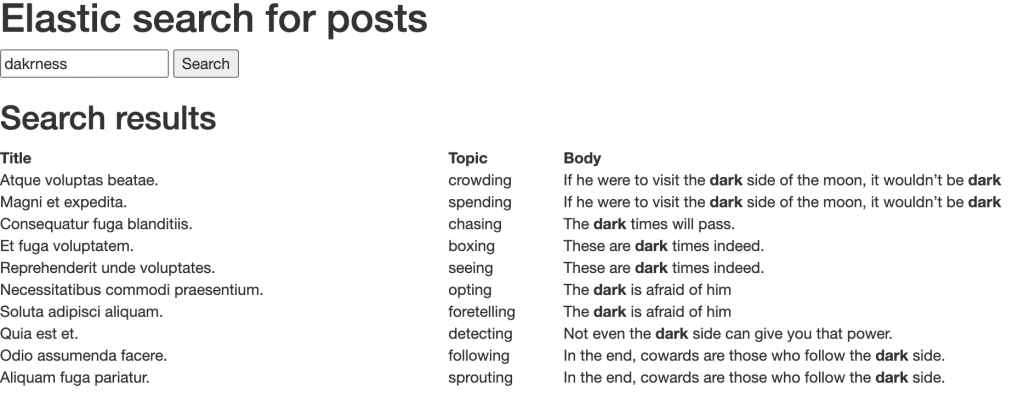

In this case, we are basically adding two new features: fuzziness and highlight.

With [.c-inline-code]fuzziness[.c-inline-code] we tell Elastic that the query may contain spelling errors. It will then also match terms that are only a few character edits away from the ones typed, so we now cover not only word variations but typos too.

With [.c-inline-code]highlight[.c-inline-code] we get the matching fragments wrapped in pre and post tags (in this case HTML bold, but you can use whatever you want).
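The two options described above could be added to the query body as in this sketch. The helper name is hypothetical, and [.c-inline-code]'AUTO'[.c-inline-code] is my assumption for the fuzziness value; it lets Elasticsearch pick the allowed edit distance based on the length of each term.

```ruby
# Hypothetical helper name; returns the request body as a plain Hash.
def fuzzy_search_body(query)
  {
    query: {
      multi_match: {
        query: query,
        fields: ['title', 'body^5'],
        # 'AUTO' scales the allowed edit distance with the term length.
        fuzziness: 'AUTO'
      }
    },
    highlight: {
      # Tags wrapped around each matched fragment (HTML bold here).
      pre_tags: ['<b>'],
      post_tags: ['</b>'],
      fields: { title: {}, body: {} }
    }
  }
end
```

Passing the result to [.c-inline-code]Post.search[.c-inline-code] should return hits that carry the highlighted fragments for each field, which the view can then render instead of the raw text.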

Let’s now search for ‘dakrness’: a word that is neither in our database nor spelled correctly.

Conclusions

Although in this post we’ve just covered the tip of the iceberg, we can see the potential of this search engine when it comes to full-text searches thanks to its natural language processing tools. We could also see how easy it is to integrate it into our Rails application using the official Ruby gems.

But that's not all. Elastic also has several other applications. For example, we have been using it in some of our applications not only to tolerate typos and make searches smarter and faster, but also to add synonyms for some lists of words in our e-commerce apps, where we expect searches like ‘ps4’ to match ‘playstation 4’ or ‘playstation four’, and ‘ipad’ to match ‘i pad’ or ‘i-pad’. It also has several interesting uses in geospatial queries, including geodistances and geoshapes.

I encourage you to get lost in the extensive Elastic documentation and discover the huge potential it can add to our applications.